LEARNING OPTIMAL THRESHOLD FOR BAYESIAN POSTERIOR PROBABILITIES TO MITIGATE THE CLASS IMBALANCE PROBLEM

DOI: https://doi.org/10.15625/0866-708X/48/4/1155

ABSTRACT

Class imbalance is one of the problems that degrade classifier performance. Researchers have introduced many methods to tackle it, including pre-processing, internal classifier processing, and post-processing, the last of which relies mainly on posterior probabilities. The Bayesian Network (BN) is known as a classifier that produces good posterior probabilities. This study proposes two methods that use Bayesian posterior probabilities to deal with imbalanced data.

In the first method, we optimize the threshold on the posterior probabilities produced by BNs to maximize the F1-Measure. Once the optimal threshold is found, we use it for the final classification. We investigate this method on several Bayesian classifiers: Naive Bayes (NB), BN, TAN, BAN, and Markov Blanket BN. In the second method, instead of learning a threshold for each classifier separately as in the first, we combine these classifiers in a voting ensemble. The experimental results on 20 benchmark imbalanced datasets collected from the UCI repository show that our methods significantly outperform the baseline NB. These methods also perform as well as state-of-the-art sampling methods, and significantly better in certain cases.
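The threshold search described above can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`f1_score`, `best_threshold`, `average_posteriors`), the exhaustive search over observed posterior values, and the use of simple posterior averaging as the voting rule are all assumptions made for illustration, since the abstract does not specify these details. In practice the threshold would presumably be learned on training or validation data rather than on the test set.

```python
from typing import List, Sequence, Tuple


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """F1-Measure for the positive (minority) class, labelled 1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(
    y_true: Sequence[int], posteriors: Sequence[float]
) -> Tuple[float, float]:
    """Try each distinct posterior value as a cut-off (an assumed search
    strategy) and return the (threshold, F1) pair with the highest F1."""
    best_t, best_f1 = 0.5, -1.0
    for t in sorted(set(posteriors)):
        y_pred = [1 if p >= t else 0 for p in posteriors]
        score = f1_score(y_true, y_pred)
        if score > best_f1:
            best_t, best_f1 = t, score
    return best_t, best_f1


def average_posteriors(per_classifier: Sequence[Sequence[float]]) -> List[float]:
    """Assumed voting rule: mean positive-class posterior per instance
    across classifiers (one inner sequence per classifier)."""
    n = len(per_classifier)
    return [sum(column) / n for column in zip(*per_classifier)]


# Hypothetical imbalanced validation set: 6 negatives, 2 positives.
y_val = [0, 0, 0, 0, 0, 0, 1, 1]
p_val = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.40, 0.60]

default_pred = [1 if p >= 0.5 else 0 for p in p_val]
default_f1 = f1_score(y_val, default_pred)
threshold, tuned_f1 = best_threshold(y_val, p_val)
print(f"default F1 = {default_f1:.3f}, "
      f"tuned threshold = {threshold:.2f}, tuned F1 = {tuned_f1:.3f}")
```

On this toy data the default cut-off of 0.5 misses one of the two positives, while a lower cut-off recovers it at the cost of one false positive and yields a higher F1. For the ensemble variant, the same `best_threshold` search could be applied to the output of `average_posteriors`, again under the assumption that the vote is a plain average.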

License

Vietnam Journal of Science and Technology (VJST) is an open-access, peer-reviewed journal. All academic publications are made free for everyone to read and download. In addition, articles are published under the terms of the Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA) Licence, which permits use, distribution, and reproduction in any medium, provided the original work is properly cited and the ShareAlike terms are followed.

Copyright on any research article published in VJST is retained by the respective author(s), without restrictions. Authors grant VAST Journals System a license to publish the article and identify itself as the original publisher. By giving VJST permission to publish their research work, whether via the VJST journal portal or another channel, the author(s) agree to all the terms and conditions of the https://creativecommons.org/licenses/by-sa/4.0/ License and to the terms and conditions set by VJST.

Authors are responsible for securing all necessary copyright permissions for the use of third-party materials in their manuscript.

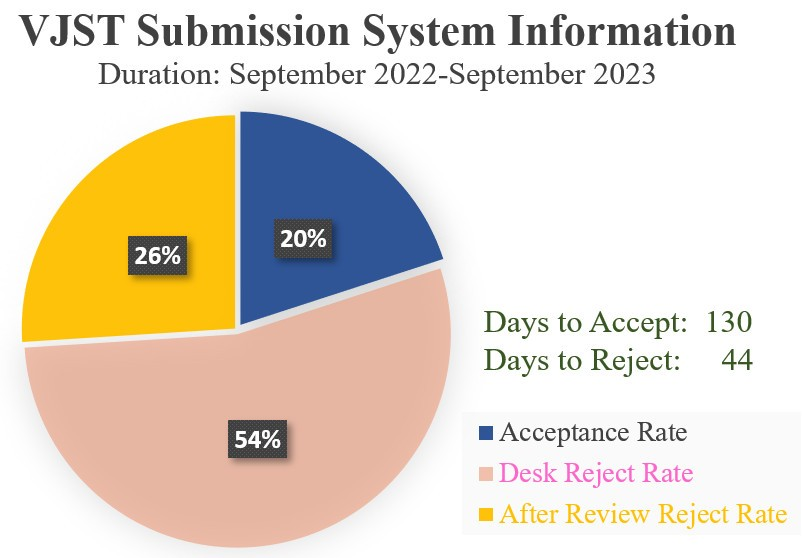

Vietnam Journal of Science and Technology (VJST) is pleased to notice: