A method to utilize prior knowledge for extractive summarization based on pre-trained language models


DOI: https://doi.org/10.15625/2525-2518/20241

Keywords: Text summarization, knowledge injection, pre-trained models, Transformer, BERT

Abstract

This paper presents a novel model for extractive summarization that integrates the contextual representations of a pre-trained language model (PLM), such as BERT, with prior knowledge derived from unsupervised learning methods. Assessing sentence importance is crucial in extractive summarization, and prior knowledge provides indicators of how important each sentence is within a document. Our model introduces a method for estimating sentence importance from this prior knowledge, complementing the contextual representations produced by the PLM. Unlike previous approaches that rely primarily on the PLM alone, our model leverages both contextual representations and the prior knowledge extracted from each input document. Conditioning on prior knowledge allows the model to emphasize key sentences when generating the final summary. We evaluate the model on three benchmark datasets in two languages, where it shows improved performance over strong extractive summarization baselines. Additionally, our ablation study shows that injecting knowledge into some of the early attention layers yields greater benefits than injecting it into other layers. The model code is publicly available for further exploration.
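The abstract does not say how the prior is computed or how it is injected into the attention layers. As a rough illustration only, the Python sketch below assumes (1) a SumBasic-style word-frequency score as the unsupervised prior, swapped in here as one simple possibility, and (2) an additive bias `lam * prior[j]` on the attention logits, applied only in the first `inject_first` layers of a toy, parameter-free encoder. All names (`frequency_prior`, `prior_biased_attention`, `toy_encoder`, `STOPWORDS`, `lam`, `inject_first`) are hypothetical; this is not the authors' released code.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list; a real system would use a language-specific one.
STOPWORDS = {"a", "an", "and", "for", "in", "into", "is", "it",
             "of", "on", "the", "to", "was", "which", "with"}


def tokenize(sentence: str) -> list[str]:
    # Lowercased word tokens; deliberately simpler than a PLM tokenizer.
    return re.findall(r"\w+", sentence.lower())


def frequency_prior(sentences: list[str]) -> list[float]:
    """SumBasic-style prior (assumed): mean document-level probability of a
    sentence's content words, rescaled so the top sentence gets 1.0."""
    tokenized = [[w for w in tokenize(s) if w not in STOPWORDS] for s in sentences]
    counts = Counter(w for toks in tokenized for w in toks)
    total = sum(counts.values())
    raw = [sum(counts[w] / total for w in toks) / len(toks) if toks else 0.0
           for toks in tokenized]
    top = max(raw, default=0.0)
    return [r / top if top > 0 else 0.0 for r in raw]


def softmax(logits: list[float]) -> list[float]:
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def prior_biased_attention(queries, keys, values, prior, lam=1.0):
    """Single-head scaled dot-product attention in which the logit for key j
    gets an additive bias lam * prior[j] (an assumed injection scheme)."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        logits = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) + lam * p
                  for k, p in zip(keys, prior)]
        w = softmax(logits)
        weights.append(w)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


def toy_encoder(vectors, prior, num_layers=4, inject_first=2, lam=1.0):
    """Parameter-free stack of self-attention layers with residual connections;
    the prior bias is applied only in the first `inject_first` layers."""
    h = vectors
    for layer in range(num_layers):
        bias = lam if layer < inject_first else 0.0
        attended, _ = prior_biased_attention(h, h, h, prior, lam=bias)
        # Residual connection: add each sentence's previous representation.
        h = [[a + x for a, x in zip(att, row)] for att, row in zip(attended, h)]
    return h


if __name__ == "__main__":
    sentences = [
        "The model injects prior knowledge into BERT attention layers.",
        "It was a sunny day.",
        "Prior knowledge scores indicate which sentences matter for the summary.",
    ]
    prior = frequency_prior(sentences)
    # Toy 2-d vectors standing in for PLM sentence embeddings (hypothetical).
    vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    _, plain = prior_biased_attention(vecs, vecs, vecs, prior, lam=0.0)
    _, biased = prior_biased_attention(vecs, vecs, vecs, prior, lam=2.0)
    print("prior:", [round(p, 3) for p in prior])
    print("weights for query 0 without / with prior:", plain[0], biased[0])
    print("encoded:", toy_encoder(vecs, prior))
```

Because the bias is added before the softmax, raising `lam` shifts each query's attention toward sentences with higher prior scores while keeping the weights normalized. Choosing `inject_first` smaller than `num_layers` mimics restricting injection to early layers, which is the kind of design choice the ablation study examines.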




License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

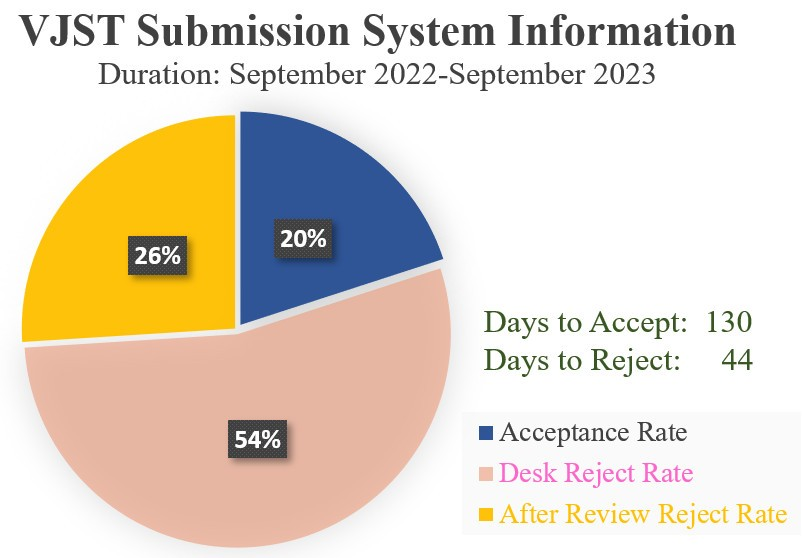

Vietnam Journal of Science and Technology (VJST) is an open-access, peer-reviewed journal. All academic publications are free for everyone to read and download. Articles are published under the terms of the Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA) License, which permits use, distribution, and reproduction in any medium, provided the original work is properly cited and the ShareAlike terms are followed.

Copyright on any research article published in VJST is retained by the respective author(s) without restrictions. Authors grant the VAST Journals System a license to publish the article and to identify itself as the original publisher. By giving VJST permission to publish their work, whether through the VJST journal portal or another channel, the author(s) agree to all terms and conditions of the CC BY-SA 4.0 License (https://creativecommons.org/licenses/by-sa/4.0/) and to the terms and conditions set by VJST.

Authors are responsible for securing all necessary copyright permissions for the use of third-party materials in their manuscripts.

Vietnam Journal of Science and Technology (VJST) is pleased to notice:
