RGTranCNet: Effective image captioning model using cross-attention and semantic knowledge

DOI: https://doi.org/10.15625/2525-2518/22381

Keywords: Image captioning, Cross-attention mechanism, Transformer, ConceptNet knowledge base

Abstract

Image captioning is a key task that connects visual processing and linguistic analysis. However, methods based on long short-term memory (LSTM) networks and conventional attention mechanisms struggle to model complex relationships between objects and do not support efficient parallel processing. Accurately describing objects that are absent from the training data is a further challenge. To address these problems, this study proposes an image captioning model built on a Transformer architecture augmented with cross-attention and semantic knowledge drawn from ConceptNet. The model follows an encoder-decoder design. The encoder extracts features from object regions and builds a relation graph that represents the visual scene, while the decoder fuses visual and semantic features through cross-attention to generate captions that are both accurate and diverse. Incorporating ConceptNet knowledge improves accuracy, particularly for objects not seen during training. Experiments on the standard MS COCO dataset show that the proposed approach outperforms recent state-of-the-art methods. Moreover, the proposed semantic integration strategy can be readily adapted to other image captioning models.
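The abstract does not specify exactly how the decoder combines visual and semantic information. As a rough illustration only, the Python sketch below shows scaled dot-product cross-attention in which decoder word states act as queries over a single memory formed by concatenating object-region features with ConceptNet concept embeddings. This fusion scheme is an assumption: the sketch omits learned query/key/value projections, multiple heads, and the encoder's relation graph, and the function names (`cross_attention`, `fuse_visual_and_semantic`) and toy vectors are hypothetical rather than taken from RGTranCNet.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def dot(a: List[float], b: List[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Queries come from one sequence (decoder states) and keys/values from
    another (the encoder memory), which is what makes it *cross*-attention.
    Returns (outputs, attention_weights).
    """
    if len(keys) != len(values):
        raise ValueError("keys and values must have the same length")
    scale = 1.0 / math.sqrt(len(queries[0]))
    weights = [softmax([scale * dot(q, k) for k in keys]) for q in queries]
    dim_v = len(values[0])
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(dim_v)]
        for row in weights
    ]
    return outputs, weights


def fuse_visual_and_semantic(word_states: Matrix, region_feats: Matrix,
                             concept_feats: Matrix) -> Tuple[Matrix, Matrix]:
    """Hypothetical fusion: attend over visual regions and ConceptNet concepts jointly.

    Assumes region and concept vectors already share one embedding size;
    a real model would project both into that space with learned weights.
    """
    if len(region_feats[0]) != len(concept_feats[0]):
        raise ValueError("region and concept features must share a dimension")
    memory = region_feats + concept_feats  # list concatenation along the sequence axis
    return cross_attention(word_states, memory, memory)


if __name__ == "__main__":
    words = [[1.0, 0.0], [0.0, 1.0]]      # toy decoder states for two tokens
    regions = [[1.0, 0.0], [0.5, 0.5]]    # toy object-region features
    concepts = [[0.0, 1.0]]               # toy ConceptNet concept embedding
    out, weights = fuse_visual_and_semantic(words, regions, concepts)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of `weights` is a probability distribution over the combined memory, so a single word state can draw on visual regions and external concepts in the same step. Keeping both sources in one memory is simply the most compact choice for a sketch; separate attention branches per source would be an equally plausible reading of the abstract.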

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Vietnam Journal of Science and Technology (VJST) is an open-access, peer-reviewed journal. All publications are free for everyone to read and download. Articles are published under the terms of the Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA) License, which permits use, distribution, and reproduction in any medium, provided the original work is properly cited and the ShareAlike terms are followed.

Copyright on any research article published in VJST is retained by the respective author(s), without restrictions. Authors grant the VAST Journals System a license to publish the article and to identify itself as the original publisher. By giving VJST permission to publish their research work, whether through the VJST journal portal or another channel, the author(s) agree to all the terms and conditions of the https://creativecommons.org/licenses/by-sa/4.0/ License and to the terms and conditions set by VJST.

Authors are responsible for securing all necessary copyright permissions for the use of third-party materials in their manuscript.

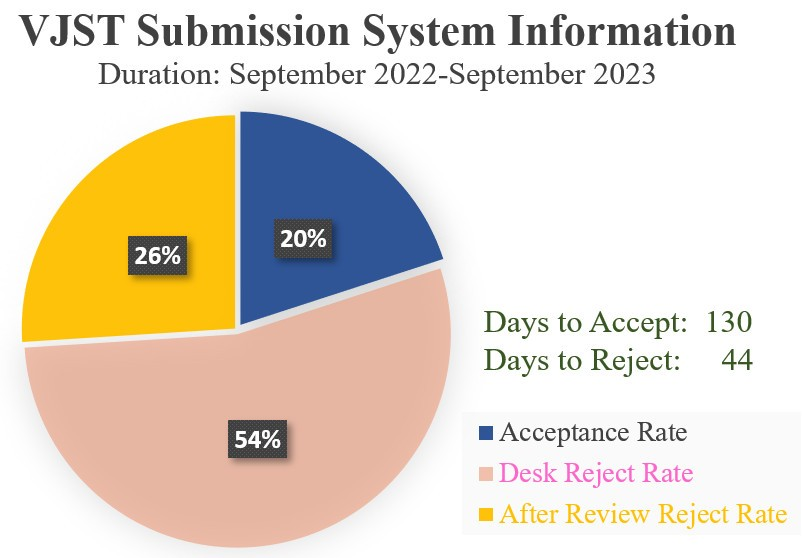

Vietnam Journal of Science and Technology (VJST) is pleased to announce:
